
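Purpose: rapidly develop high-performance, highly reliable network servers and clients

Advantages: provides an asynchronous, event-driven network application framework and tools

Put simply: a handy tool for working with sockets

Without Netty, what would we use?

Ancient times: java.net + java.io

Modern times: java.nio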

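Others: Mina, Grizzly

Why not Mina?

1. Both are Trustin Lee's work, and Netty is the later one;

2. Mina couples its core too tightly to some features, so users cannot opt out of them when they are not needed, which costs some performance; Netty fixed this design problem;

3. Netty's documentation is clearer, and most of Mina's features are also available in Netty;

4. Netty has a shorter release cycle, and new versions come out fairly quickly;

5. Their architectures are not very different. Mina lives under Apache, while Netty lives under JBoss and is tightly integrated with it. Netty supports Google Protocol Buffers and has more complete IoC container support (Spring, Guice, JBoss MC and OSGi);

6. Netty is simpler to use than Mina: you can customize how upstream and/or downstream events are handled, and use decoders and encoders to decode and encode what is sent;

7. Netty and Mina handle UDP differently: Netty exposes UDP's connectionless nature, whereas Mina abstracts UDP at a higher level so that it can be treated as a "connection-oriented" protocol, which is harder to achieve with Netty.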

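Netty's features

Design

Unified API for different transport types (blocking and non-blocking)

Based on a flexible, extensible event-driven model

Highly customizable threading model

Reliable support for connectionless datagram sockets (UDP)

Performance

Better throughput, lower latency

Lower resource consumption

Minimizes unnecessary memory copying

Security

Complete SSL/TLS and StartTLS support

Runs well in restricted environments such as Applets and Android

Robustness

No more OutOfMemoryError caused by connections that are too fast, too slow, or overloaded

No more inconsistent NIO read/write rates on high-speed networks

Ease of use

Complete JavaDoc, user guide, and examples

Concise and simple

Depends only on JDK 1.5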

Let's look at an example.

Server side:

```java
package me.hello.netty;

import org.jboss.netty.bootstrap.ServerBootstrap;
import org.jboss.netty.channel.*;
import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;
import org.jboss.netty.handler.codec.string.StringDecoder;
import org.jboss.netty.handler.codec.string.StringEncoder;

import java.net.InetSocketAddress;
import java.util.concurrent.Executors;

/**
 * God Bless You!
 * Author: Fangniude
 * Date: 2013-07-15
 */
public class NettyServer {

    public static void main(String[] args) {
        // The first pool supplies boss threads (accept), the second worker threads (read/write).
        ServerBootstrap bootstrap = new ServerBootstrap(new NioServerSocketChannelFactory(
                Executors.newCachedThreadPool(), Executors.newCachedThreadPool()));

        // Set up the default event pipeline: bytes <-> String, then the business handler.
        // Note: without a frame decoder, one TCP read may carry a partial message or several
        // messages; that is acceptable for this demo.
        bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
            @Override
            public ChannelPipeline getPipeline() throws Exception {
                return Channels.pipeline(new StringDecoder(), new StringEncoder(), new ServerHandler());
            }
        });

        // Bind and start to accept incoming connections.
        Channel bind = bootstrap.bind(new InetSocketAddress(8000));
        System.out.println("Server started, listening on " + bind.getLocalAddress()
                + ", waiting for clients to register...");
    }

    private static class ServerHandler extends SimpleChannelHandler {

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            if (e.getMessage() instanceof String) {
                String message = (String) e.getMessage();
                System.out.println("Client sent: " + message);
                // Reply to the client; StringEncoder turns the String into bytes.
                e.getChannel().write("Server received what you just sent: " + message);
                System.out.println("\nWaiting for client input...");
            }
            super.messageReceived(ctx, e);
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, ExceptionEvent e) throws Exception {
            // Forward the exception upstream; a real server would usually log it and close e.getChannel().
            super.exceptionCaught(ctx, e);
        }

        @Override
        public void channelConnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("A client has registered...");
            System.out.println("Client:" + e.getChannel().getRemoteAddress());
            System.out.println("Server:" + e.getChannel().getLocalAddress());
            System.out.println("\nWaiting for client input...");
            super.channelConnected(ctx, e);
        }
    }
}
```

Client side:

```java
package me.hello.netty;

import org.jboss.netty.bootstrap.ClientBootstrap;
import org.jboss.netty.channel.*;
import org.jboss.netty.channel.socket.nio.NioClientSocketChannelFactory;
import org.jboss.netty.handler.codec.string.StringDecoder;
import org.jboss.netty.handler.codec.string.StringEncoder;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.net.InetSocketAddress;
import java.util.concurrent.Executors;

/**
 * God Bless You!
 * Author: Fangniude
 * Date: 2013-07-15
 */
public class NettyClient {

    public static void main(String[] args) {
        // Configure the client.
        ClientBootstrap bootstrap = new ClientBootstrap(new NioClientSocketChannelFactory(
                Executors.newCachedThreadPool(), Executors.newCachedThreadPool()));

        // Set up the default event pipeline.
        bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
            @Override
            public ChannelPipeline getPipeline() throws Exception {
                return Channels.pipeline(new StringDecoder(), new StringEncoder(), new ClientHandler());
            }
        });

        // Start the connection attempt.
        ChannelFuture future = bootstrap.connect(new InetSocketAddress("localhost", 8000));

        // Wait until the connection is closed or the connection attempt fails.
        future.getChannel().getCloseFuture().awaitUninterruptibly();

        // Shut down thread pools to exit.
        bootstrap.releaseExternalResources();
    }

    private static class ClientHandler extends SimpleChannelHandler {

        // Reading System.in blocks the I/O worker thread; acceptable only in a console demo.
        private final BufferedReader sin = new BufferedReader(new InputStreamReader(System.in));

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            if (e.getMessage() instanceof String) {
                String message = (String) e.getMessage();
                System.out.println(message);
                // Prompt first, then block on stdin and send the next line.
                System.out.println("\nPlease enter the message to send:");
                e.getChannel().write(sin.readLine());
            }
            super.messageReceived(ctx, e);
        }

        @Override
        public void channelConnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("Connected to the server...");
            System.out.println("\nPlease enter the message to send:");
            super.channelConnected(ctx, e);
            e.getChannel().write(sin.readLine());
        }
    }
}
```

Netty's overall architecture

Netty components

ChannelFactory

Boss

Worker

Channel

ChannelEvent

Pipeline

ChannelContext

Handler

Sink

Core server-side classes

NioServerSocketChannelFactory

NioServerBossPool

NioWorkerPool

NioServerBoss

NioWorker

NioServerSocketChannel

NioAcceptedSocketChannel

DefaultChannelPipeline

NioServerSocketPipelineSink

Channels

ChannelFactory

The Channel factory, a very important class

Holds the startup-related parameters

NioServerSocketChannelFactory

NioClientSocketChannelFactory

NioDatagramChannelFactory

These are the NIO implementations; there are also OIO and Local ones

SelectorPool

A thread pool of Selectors

NioServerBossPool: default thread count 1

NioClientBossPool: 1

NioWorkerPool: 2 × the number of available processors

NioDatagramWorkerPool

Selector

The selector, a core component

NioServerBoss

NioClientBoss

NioWorker

NioDatagramWorker

Channel

The communication channel

NioServerSocketChannel

NioClientSocketChannel

NioAcceptedSocketChannel

NioDatagramChannel

Sink

Handles the interaction with the underlying layer

e.g. bind, write, close

NioServerSocketPipelineSink

NioClientSocketPipelineSink

NioDatagramPipelineSink

Pipeline

Maintains all the Handlers

ChannelContext

One per Handler in a Channel's Pipeline; it sits between the Handler and the Pipeline

Handler

Processes the events of a Channel

ChannelPipeline

Good design: event-driven

Good design: threading model

Caveats

The read position when decoding

Closing a Channel

More Handlers
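For the first caveat, the read position when decoding: a decoder that finds only part of a frame must leave the buffer's read position where the frame began, so the next call can retry once more bytes arrive. In Netty 3 this is typically done with ChannelBuffer's markReaderIndex()/resetReaderIndex() inside a FrameDecoder. The sketch below shows the same idea with plain java.nio.ByteBuffer mark()/reset(); the 4-byte length prefix and the LengthPrefixedDecoder class are assumptions for illustration, not Netty code.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical decoder for frames laid out as [4-byte big-endian length][UTF-8 body].
final class LengthPrefixedDecoder {

    /** Returns one decoded frame, or null (with the position unchanged) if it is incomplete. */
    static String decode(ByteBuffer in) {
        if (in.remaining() < 4) {
            return null;            // length field not complete yet; nothing consumed
        }
        in.mark();                  // remember where this frame starts
        int length = in.getInt();
        if (in.remaining() < length) {
            in.reset();             // roll back so the length is re-read when more bytes arrive
            return null;
        }
        byte[] body = new byte[length];
        in.get(body);
        return new String(body, StandardCharsets.UTF_8);
    }
}
```

If the reset() were omitted, the position would stay past the length field and the next call would misread body bytes as a new length.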

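Closing a Channel

A Channel you have finished with can be closed directly:

1. Add a listener to the ChannelFuture

2. writeComplete

A Channel that has not been used for some time can also be closed: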

TimeoutHandler
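Closing idle connections is what Netty 3's timeout handlers (for example IdleStateHandler and ReadTimeoutHandler in org.jboss.netty.handler.timeout) automate. The bookkeeping underneath is small enough to sketch without Netty: remember when the last read or write happened and treat the connection as idle once that moment is older than a threshold. IdleTracker is a hypothetical class, and the clock is passed in explicitly so the logic is deterministic to test.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical idle tracker: not a Netty class, just the bookkeeping a timeout handler needs.
final class IdleTracker {
    private final long timeoutNanos;
    private long lastActivityNanos;

    IdleTracker(long timeout, TimeUnit unit, long nowNanos) {
        this.timeoutNanos = unit.toNanos(timeout);
        this.lastActivityNanos = nowNanos;
    }

    /** Call on every read or write seen on the channel. */
    void onActivity(long nowNanos) {
        lastActivityNanos = nowNanos;
    }

    /** True once no activity has been recorded for at least the timeout. */
    boolean isIdle(long nowNanos) {
        return nowNanos - lastActivityNanos >= timeoutNanos;
    }
}
```

In a real handler the check would run on a timer, and an idle result would lead to closing the Channel.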


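Preface

The first time I heard of the Reactor pattern was one evening three years ago, when a roommate suddenly came over and asked me what it was. I looked it up online, and most people gave the example of the Selector in NIO: it was simply NIO's Selector multiplexing model under a rather fancy name. It did introduce the concept of an EventLoop, which was new to me, but the code was the same, so I didn't pay much attention to the pattern. Recently, however, I started reading the Netty source code, and the Reactor pattern is heavily promoted in many articles about Netty, so I asked myself again: what is the Reactor pattern? This article is my attempt to answer that question based on my own understanding.

What is the Reactor pattern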

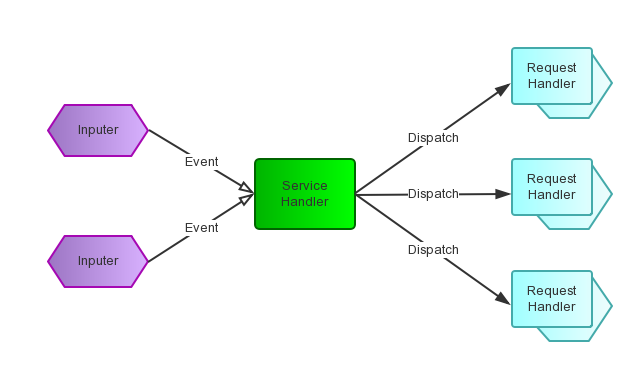

To answer this question, the first step is of course to turn to Google or Wikipedia. Wikipedia says: “The reactor design pattern is an event handling pattern for handling service requests delivered concurrently by one or more inputs. The service handler then demultiplexes the incoming requests and dispatches them synchronously to associated request handlers.” From this description we learn that the Reactor pattern is first of all event-driven: there are one or more concurrent input sources, one Service Handler, and multiple Request Handlers, and the Service Handler synchronously demultiplexes the incoming requests (Events) and dispatches them to the corresponding Request Handlers. It can also be shown as a diagram.

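Structurally, this resembles the producer-consumer pattern, in which one or more producers put events into a Queue and one or more consumers actively poll events from that Queue to process them. The Reactor pattern, however, has no Queue for buffering: whenever an Event arrives at the Service Handler, the Service Handler itself dispatches it, according to its Event type, to the corresponding Request Handler.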
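To make the contrast with producer-consumer concrete, here is a minimal sketch of the dispatch step on its own: the service handler looks up the request handler registered for the event's type and calls it synchronously, on the same thread, with no queue in between. EventType, Event and ServiceHandler are illustrative names, not types from any library.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.function.Consumer;

enum EventType { ACCEPT, READ, WRITE }

final class Event {
    final EventType type;
    final String payload;

    Event(EventType type, String payload) {
        this.type = type;
        this.payload = payload;
    }
}

// Illustrative service handler: demultiplexes by event type and dispatches synchronously.
final class ServiceHandler {
    private final Map<EventType, Consumer<Event>> requestHandlers = new EnumMap<>(EventType.class);

    void register(EventType type, Consumer<Event> handler) {
        requestHandlers.put(type, handler);
    }

    // No queue: the request handler has finished running before dispatch() returns.
    void dispatch(Event event) {
        Consumer<Event> handler = requestHandlers.get(event.type);
        if (handler == null) {
            throw new IllegalStateException("no handler for " + event.type);
        }
        handler.accept(event);
    }
}
```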

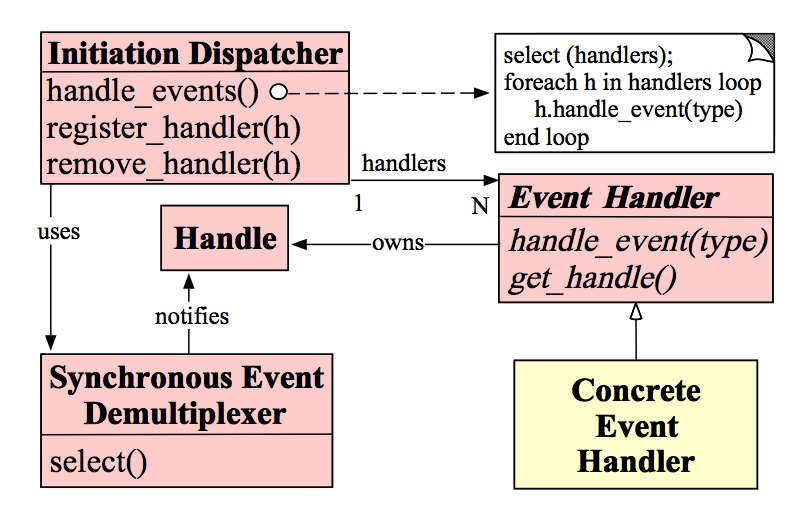

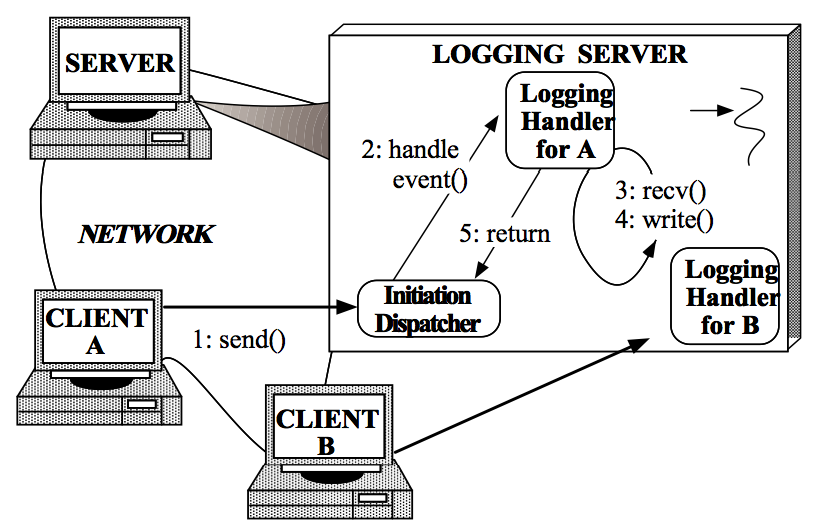

More formally, the paper Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events says: “The Reactor design pattern handles service requests that are delivered concurrently to an application by one or more clients. Each service in an application may consistent of several methods and is represented by a separate event handler that is responsible for dispatching service-specific requests. Dispatching of event handlers is performed by an initiation dispatcher, which manages the registered event handlers. Demultiplexing of service requests is performed by a synchronous event demultiplexer. Also known as Dispatcher, Notifier”. This is similar to Wikipedia's description: there are multiple input sources and multiple EventHandlers (RequestHandlers) that process different requests. The Initiation Dispatcher manages the EventHandlers: each EventHandler first registers with the Initiation Dispatcher, which then dispatches incoming Events to the registered EventHandlers. The Initiation Dispatcher does not itself listen for arriving Events, though; that job is delegated to the Synchronous Event Demultiplexer.

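Structure of the Reactor pattern

Having settled what the Reactor pattern is, let's look at the modules it is made of. A diagram is a concise and vivid way to present this, so here is a figure showing the name of each module and the relationships between them:

Handle: a handle in the operating system, i.e. an OS-level abstraction of a resource, such as an open file, a connection (Socket), or a Timer. Since the Reactor pattern is mostly used in network programming, a Handle here usually means a Socket Handle, i.e. a network connection (a Channel in Java NIO). The Channel is registered with the Synchronous Event Demultiplexer so that the events occurring on the Handle are monitored: for a ServerSocketChannel this is the ACCEPT event, for a SocketChannel the READ, WRITE, CLOSE events, and so on.

Synchronous Event Demultiplexer: blocks waiting for events on a set of Handles. When the blocking wait returns, the returned Handles are guaranteed to be able to perform the returned event types without blocking. This module is usually implemented with the operating system's select call. Java NIO wraps it in Selector: when Selector.select() returns, calling the Selector's selectedKeys() method yields a Set<SelectionKey>, where each SelectionKey represents a Channel on which events occurred, together with the types of those events. If the flow “Synchronous Event Demultiplexer ---notifies--> Handle” in the figure above is correct, then internally select() would set the Handle's state when an event arrives and only then return. I don't know the internal mechanism, so I keep the original figure.

Initiation Dispatcher: manages the Event Handlers, i.e. it is the container of EventHandlers, used to register and remove them. It is also the entry point of the Reactor pattern: it calls the Synchronous Event Demultiplexer's select method to block waiting for events, and when the wait returns, it dispatches each event, according to the Handle it occurred on, to the corresponding Event Handler, i.e. it calls back the EventHandler's handle_event() method.

Event Handler: defines the event-handling method handle_event(), which the InitiationDispatcher calls back.

Concrete Event Handler: implements the EventHandler interface with the logic for handling specific events.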

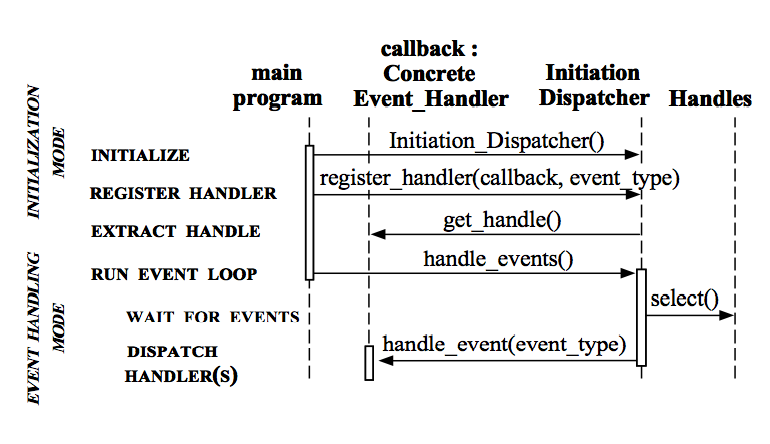

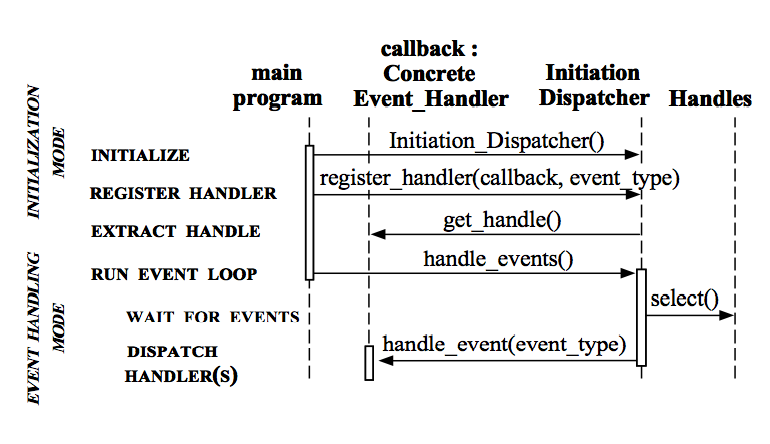

Interactions between the modules of the Reactor pattern

Here is a brief description of how the Reactor's modules interact, starting from the sequence diagram:

1. Initialize the InitiationDispatcher, along with a Map from Handle to EventHandler.
2. Register EventHandlers with the InitiationDispatcher. Each EventHandler holds a reference to its Handle, which builds the Handle-to-EventHandler mapping (the Map).
3. Call the InitiationDispatcher's handle_events() method to start the Event Loop. In the Event Loop, call select() (the Synchronous Event Demultiplexer) to block waiting for Events.
4. When Events occur on one or more Handles, select() returns; the InitiationDispatcher looks up the registered EventHandler for each returned Handle and calls back that EventHandler's handle_event() method.
5. Inside an EventHandler's handle_event() method, new EventHandlers can also be registered with the InitiationDispatcher. For example, when a new client connects, an AcceptorEventHandler creates a new EventHandler for the new connection and registers it with the InitiationDispatcher.

Reactor pattern implementation

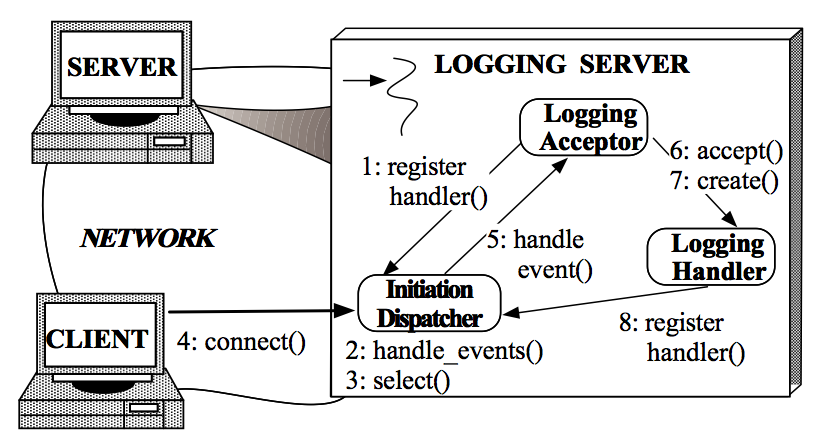

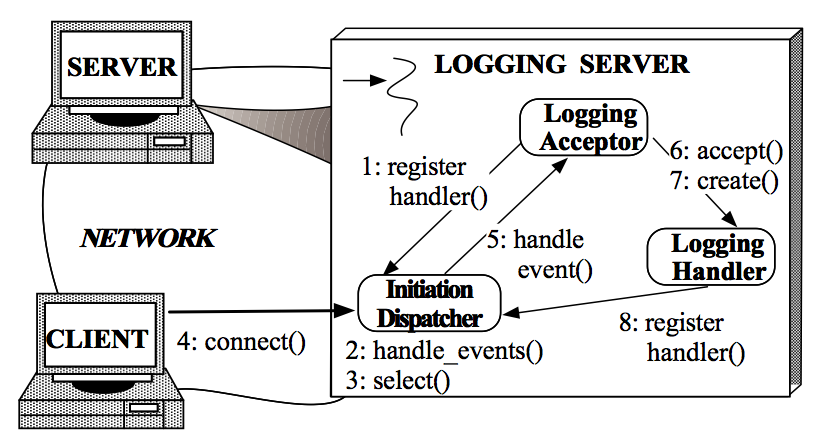

�?a target="_blank" bluelink"="" tabindex="-1" style="border: 0px none; outline: none; color: #296bcc;">Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events中,一直以Logging Server来分析Reactor模式�Q�这个Logging Server的实现完全遵循这里对Reactor描述�Q�因而放在这里以做参考。Logging Server中的Reactor模式实现分两个部分:(x��)Client�q�接到Logging Server和Client向Logging Server写Log。因而对它的描述分成�q�两个步骤�?br />Client�q�接到Logging Server

1. Logging Server注册LoggingAcceptor到InitiationDispatcher�?br />2. Logging Server调用InitiationDispatcher的handle_events()�Ҏ(gu��)��启动�?br />3. InitiationDispatcher内部调用select()�Ҏ(gu��)���Q�Synchronous Event Demultiplexer�Q�,��d���{�待Client�q�接�?br />4. Client�q�接到Logging Server�?br />5. InitiationDisptcher中的select()�Ҏ(gu��)���q�回�Q��ƈ通知LoggingAcceptor有新的连接到来�?nbsp;

6. LoggingAcceptor调用accept�Ҏ(gu��)��accept�q�个新连接�?br />7. LoggingAcceptor创徏新的LoggingHandler�?br />8. 新的LoggingHandler注册到InitiationDispatcher�?同时也注册到Synchonous Event Demultiplexer�?�Q�等待Client发�v写log��h���?br />Client向Logging Server写Log

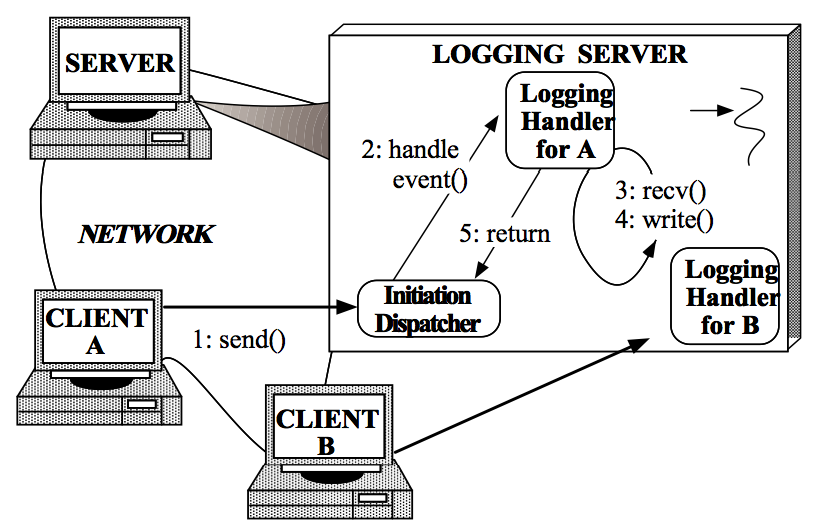

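1. The Client sends a log to the Logging Server.
2. The InitiationDispatcher detects an event on the corresponding Handle, returns from its blocking wait, finds the LoggingHandler for the returned Handle, and calls back the LoggingHandler's handle_event() method.
3. The LoggingHandler's handle_event() method reads the log data from the Handle.
4. It writes the received log to a log file, a database, or another device.
Steps 3 and 4 repeat until the current log has been fully processed.
5. Control returns to the InitiationDispatcher, which waits for the next log-write request.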
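The two Logging Server flows above can be traced in a small, single-threaded simulation. This is a sketch under stated assumptions, not the paper's C++ code: a Handle is just an int id, the Synchronous Event Demultiplexer's select() is simulated by a caller-supplied list of ready handles, and InitiationDispatcher, LoggingAcceptor and LoggingHandler only mirror the roles the paper describes.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Event Handler role: owns one Handle (an int id here) and is called back on events.
interface EventHandler {
    int handle();
    void handleEvent(InitiationDispatcher dispatcher);
}

// Initiation Dispatcher role: keeps the Handle -> EventHandler map and dispatches.
final class InitiationDispatcher {
    private final Map<Integer, EventHandler> handlers = new HashMap<>();

    void register(EventHandler handler) {
        handlers.put(handler.handle(), handler);
    }

    // One pass of handle_events(); 'ready' stands in for the handles select() reported.
    void handleEvents(List<Integer> ready) {
        for (int handle : ready) {
            EventHandler handler = handlers.get(handle);
            if (handler != null) {
                handler.handleEvent(this);
            }
        }
    }

    int registeredCount() {
        return handlers.size();
    }
}

// Accepts a simulated connection and registers a LoggingHandler for it (steps 6 to 8).
final class LoggingAcceptor implements EventHandler {
    private final int listenHandle;
    private final List<String> logStore;
    private int nextClientHandle;

    LoggingAcceptor(int listenHandle, int firstClientHandle, List<String> logStore) {
        this.listenHandle = listenHandle;
        this.nextClientHandle = firstClientHandle;
        this.logStore = logStore;
    }

    @Override public int handle() { return listenHandle; }

    @Override public void handleEvent(InitiationDispatcher dispatcher) {
        dispatcher.register(new LoggingHandler(nextClientHandle++, logStore));
    }
}

// Handles a log-write event for one client (steps 3 and 4 of the second flow).
final class LoggingHandler implements EventHandler {
    private final int clientHandle;
    private final List<String> logStore;

    LoggingHandler(int clientHandle, List<String> logStore) {
        this.clientHandle = clientHandle;
        this.logStore = logStore;
    }

    @Override public int handle() { return clientHandle; }

    @Override public void handleEvent(InitiationDispatcher dispatcher) {
        // A real handler would read the record from the socket; here it is synthesized.
        logStore.add("log from handle " + clientHandle);
    }
}
```

Note that the acceptor registers the new LoggingHandler from inside its own callback, which is step 5 of the module interaction sequence.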

Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events includes a C++ implementation of the Reactor pattern; I haven't used C++ in many years, so I skip it.

Java NIO's implementation of the Reactor

Java NIO supports the Reactor pattern natively: the Selector class wraps the Synchronous Event Demultiplexer functionality provided by the operating system. Doug Lea has already explained this in great depth in Scalable IO In Java, so I won't repeat it here. There is also an article that gives a simple explanation of Doug Lea's Scalable IO In Java, and at least its code is formatted more neatly than in Doug Lea's slides.
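As a rough illustration of how Java NIO fills these roles, here is a single-threaded reactor in the style Doug Lea describes: the Selector is the Synchronous Event Demultiplexer, each SelectionKey's attachment holds its handler (so attaching is the registration step), and the acceptor attaches a new per-connection handler. It is a sketch, not Doug Lea's code: the NioReactor class, its runOnce() method and the echo behaviour are assumptions chosen to keep the example short and testable.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Single-threaded reactor sketch: each SelectionKey's attachment is its handler.
final class NioReactor {
    final Selector selector;
    final ServerSocketChannel server;

    NioReactor() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0));   // any free port
        server.configureBlocking(false);
        // Attaching the acceptor is the "registration" step.
        server.register(selector, SelectionKey.OP_ACCEPT, (Runnable) this::accept);
    }

    /** One event-loop iteration: block in select(), then dispatch through attachments. */
    void runOnce() throws IOException {
        selector.select();
        for (SelectionKey key : selector.selectedKeys()) {
            ((Runnable) key.attachment()).run();
        }
        selector.selectedKeys().clear();
    }

    void close() throws IOException {
        selector.close();
        server.close();
    }

    // Acceptor: accept the connection and attach a per-connection echo handler.
    private void accept() {
        try {
            SocketChannel client = server.accept();
            if (client == null) {
                return;                                   // nothing to accept after all
            }
            client.configureBlocking(false);
            SelectionKey key = client.register(selector, SelectionKey.OP_READ);
            key.attach((Runnable) () -> echo(key));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Handler: echo whatever was read; assumes a small payload fits in one write.
    private void echo(SelectionKey key) {
        SocketChannel ch = (SocketChannel) key.channel();
        ByteBuffer buf = ByteBuffer.allocate(256);
        try {
            if (ch.read(buf) < 0) {                       // peer closed the connection
                key.cancel();
                ch.close();
                return;
            }
            buf.flip();
            ch.write(buf);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

A production loop would call runOnce() repeatedly, handle partial writes with OP_WRITE, and move sub-reactors onto their own threads.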

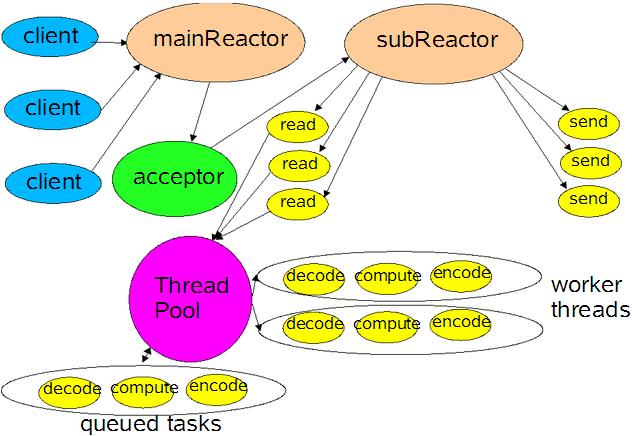

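Note that, unlike the approach here, where an InitiationDispatcher manages the EventHandlers, Doug Lea's version stores each EventHandler in the attachment of its SelectionKey, so there is no separate step for registering EventHandlers; or rather, setting the attachment is the registration. In that presentation, Doug Lea starts with a single-threaded implementation of the Reactor, Acceptor, and Handler, then makes the processing logic in the Handler multi-threaded, achieving something similar to the Proactor pattern. At that stage all IO operations are still single-threaded, so the design evolves further into a Main Reactor that handles connection (ACCEPT) events (the Acceptor) and several Sub Reactors that handle READ, WRITE and other events (the Handlers). Each Sub Reactor can run in its own thread, so IO operations become multi-threaded as well. This last model is exactly the one Netty uses. The section “Determine the Number of Initiation Dispatchers in an Application” of Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events also discusses this.

EventHandler interface definition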

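There are two design approaches for defining the EventHandler: a single-method design and a multi-method design.
A single-method interface: the Event is wrapped in an Event Object, and the EventHandler defines only one method, handle_event(Event event). The benefit of this design is extensibility, since new Event types can easily be added later. However, subclass implementations must check the Event type and branch again into different handling methods, and from that angle it is not extensible. Also, in Netty3, because ChannelEvent objects are created continually, this design causes GC instability.
A multi-method interface: this design defines a separate method in the EventHandler for each Event type. It is the strategy Netty4 uses. One goal is to avoid the GC instability caused by creating ChannelEvents; another benefit is that EventHandler implementations no longer need to check the Event type to choose different code paths. However, this design makes adding new Event types very troublesome, because the interface itself has to change.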
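The two interface styles can be compared side by side. The types below are hypothetical and only loosely modelled on Netty 3's catch-all handleUpstream() and Netty 4's per-event methods; they are not the real Netty signatures.

```java
// Single-method style: one entry point, events are objects, the handler branches on type.
interface Event {}

final class ReadEvent implements Event {
    final String data;
    ReadEvent(String data) { this.data = data; }
}

final class ClosedEvent implements Event {}

interface SingleMethodHandler {
    void handleEvent(Event e);
}

// Multi-method style: one method per event type, no event object to allocate or inspect.
interface MultiMethodHandler {
    void onRead(String data);
    void onClosed();
}

final class PrintingSingle implements SingleMethodHandler {
    final StringBuilder out = new StringBuilder();

    @Override public void handleEvent(Event e) {
        if (e instanceof ReadEvent) {                 // the type test the multi-method style avoids
            out.append("read ").append(((ReadEvent) e).data).append(';');
        } else if (e instanceof ClosedEvent) {
            out.append("closed;");
        }                                             // unknown future event types are silently ignored
    }
}

final class PrintingMulti implements MultiMethodHandler {
    final StringBuilder out = new StringBuilder();

    @Override public void onRead(String data) { out.append("read ").append(data).append(';'); }
    @Override public void onClosed() { out.append("closed;"); }
}
```

Adding a new event type means adding a class in the single-method style, but adding a method to MultiMethodHandler, which breaks every existing implementation; that is the extensibility trade-off described above.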

For Netty4's improvements over Netty3, see the following excerpt:

ChannelHandler with no event object

In 3.x, every I/O operation created a ChannelEvent object. For each read / write, it additionally created a new ChannelBuffer. It simplified the internals of Netty quite a lot because it delegates resource management and buffer pooling to the JVM. However, it often was the root cause of GC pressure and uncertainty which are sometimes observed in a Netty-based application under high load. 4.0 removes event object creation almost completely by replacing the event objects with strongly typed method invocations. 3.x had catch-all event handler methods such as handleUpstream() and handleDownstream(), but this is not the case anymore. Every event type has its own handler method now.

Why use the Reactor pattern

Thanks to the promotion by Netty and Java NIO, I set out to learn the Reactor pattern on the strength of its reputation, and had taken its excellent performance for granted. Reputation aside, at this point I have to ask myself where the benefits of the Reactor pattern actually lie, that is, why use it at all? Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events puts it like this:

Reactor Pattern advantages

Separation of concerns: The Reactor pattern decouples application-independent demultiplexing and dispatching mechanisms from application-specific hook method functionality. The application-independent mechanisms become reusable components that know how to demultiplex events and dispatch the appropriate hook methods defined by Event Handlers. In contrast, the application-specific functionality in a hook method knows how to perform a particular type of service.

Improve modularity, reusability, and configurability of event-driven applications: The pattern decouples application functionality into separate classes. For instance, there are two separate classes in the logging server: one for establishing connections and another for receiving and processing logging records. This decoupling enables the reuse of the connection establishment class for different types of connection-oriented services (such as file transfer, remote login, and video-on-demand). Therefore, modifying or extending the functionality of the logging server only affects the implementation of the logging handler class.

Improves application portability: The Initiation Dispatcher’s interface can be reused independently of the OS system calls that perform event demultiplexing. These system calls detect and report the occurrence of one or more events that may occur simultaneously on multiple sources of events. Common sources of events may include I/O handles, timers, and synchronization objects. On UNIX platforms, the event demultiplexing system calls are called select and poll [1]. In the Win32 API [16], the WaitForMultipleObjects system call performs event demultiplexing.

Provides coarse-grained concurrency control: The Reactor pattern serializes the invocation of event handlers at the level of event demultiplexing and dispatching within a process or thread. Serialization at the Initiation Dispatcher level often eliminates the need for more complicated synchronization or locking within an application process.

These all look like traits shared by many patterns: decoupling, better reuse, modularity, portability, event-driven design, coarse-grained concurrency control, and so on, so they don't say much, and in particular the touted performance gain does not show up here. Of course, the beginning of the paper does describe another, more intuitive implementation, Thread-Per-Connection, i.e. the traditional approach, and points out the following problems with it:

Thread Per Connection drawbacks

Efficiency: Threading may lead to poor performance due to context switching, synchronization, and data movement [2];

Programming simplicity: Threading may require complex concurrency control schemes;

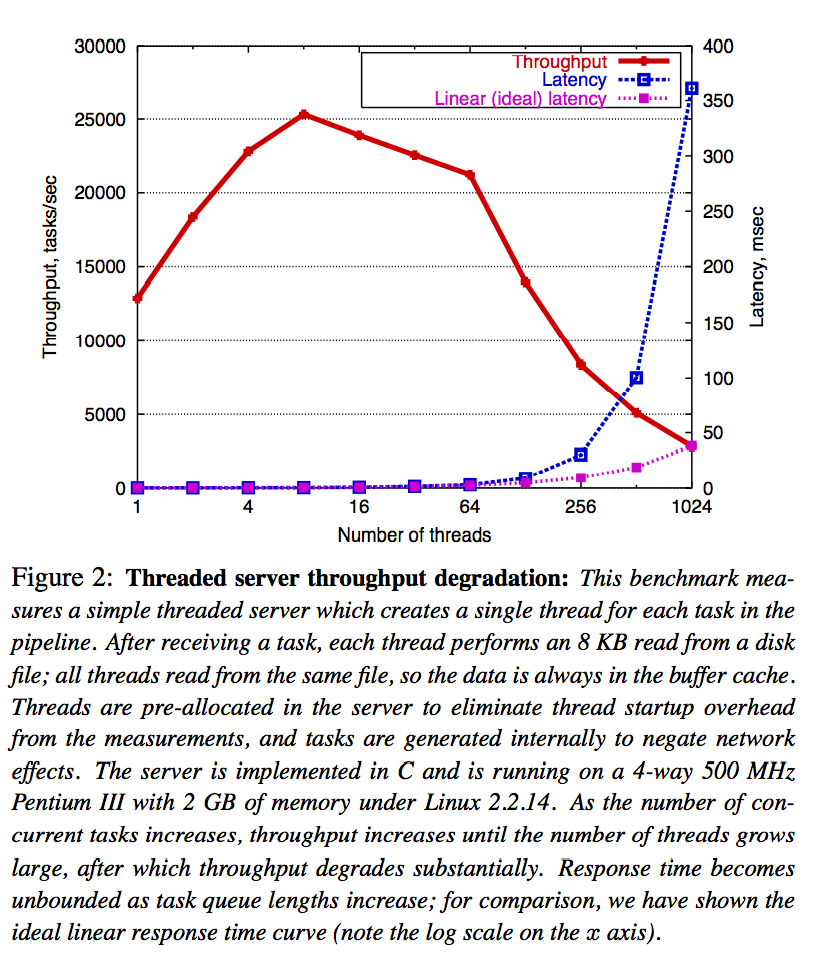

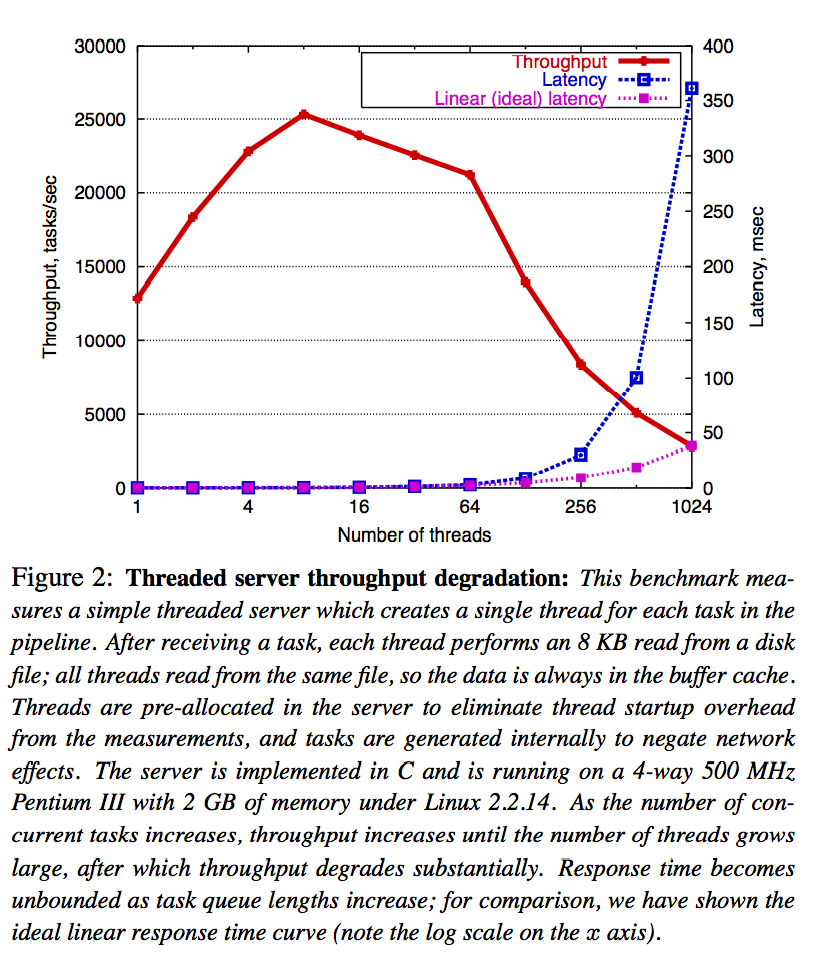

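Portability: Threading is not available on all OS platforms.

As for performance, it really comes down to the first point, Efficiency: thread switching, synchronization, and data movement cause performance problems. In other words, from a performance standpoint, the biggest gain is using fewer threads, i.e. not needing one thread per Client. My own reasoning was this: business logic often runs on those same threads anyway, and IO reads and writes are still much slower than CPU work; even though in the Reactor every read and write is guaranteed to be non-blocking, which saves some threads, does saving those threads really affect performance that much? The answer seems to be yes. The paper SEDA: Staged Event-Driven Architecture - An Architecture for Well-Conditioned, Scalable Internet Service measured how performance degrades as the number of threads grows: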

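In this measurement, each thread reads 8KB of data from disk, and all threads read the same file, so the data itself is cached inside the operating system, minimizing the effect of IO; all threads are allocated in advance, so thread startup has no effect; and all tasks are generated inside the test, so the network has no effect. The measurements were taken on Linux 2.2.14 with … GB of memory on a …-way 500MHz Pentium III. As the figure shows, as the number of threads grows, throughput starts to fall linearly at around 8 threads and drops sharply after 64, while response time rises exponentially once the thread count reaches 256. In other words, 1+1<2: thread switching, synchronization, and data movement cost performance, and the effect becomes much more pronounced beyond a certain number of threads.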

On this point, see also the C10K Problem, which describes the problem of 10K Clients connecting at the same time; by 2010 the 10M Problem had already appeared.

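Of course, some argue that “threads are expensive” is no longer true. Things may change again in the near future, or the change may already be under way or complete; I haven't run careful measurements, so I won't make any claims. My view, though, is that even if threads no longer cost as much as they used to, using the Reactor pattern, or even the SEDA pattern, to reduce the number of threads, together with its other benefits such as decoupling, modularity, and better reuse, is still worthwhile.

Drawbacks of the Reactor pattern

The drawbacks of the Reactor pattern seem fairly obvious:
1. Compared with the traditional simple model, the Reactor adds some complexity, so it has a learning curve and is harder to debug.
2. The Reactor pattern needs support from an underlying Synchronous Event Demultiplexer, such as Java's Selector backed by the operating system's select system call; a Synchronous Event Demultiplexer you implement yourself may not be as efficient.
3. In the Reactor pattern, IO reads and writes are still performed on a single thread. Even with multiple Reactors, if one of the Channels sharing a Reactor performs a long read or write, it delays the responses of the other Channels on that Reactor. For example, during a large file transfer the IO delays other Clients' responses, so for such operations the traditional Thread-Per-Connection may be a better choice, or the Proactor pattern can be used instead.

References

Reactor Pattern (Wikipedia)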

Reactor An Object Behavioral Pattern for Demultiplexing and Dispatching Handles for Synchronous Events

Scalable IO In Java

C10K Problem (Wikipedia)


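Preface

I had long intended to read the Netty source code, but my first attempt started with Netty4 and I never grasped the core flow of the framework; later I got busy with other things and put it aside. This time, on vacation, I picked up that tough nut again. Because Netty3 is still used by many projects, I decided to start with Netty3, and immediately found its code far more conventional than Netty4's: many concepts are expressed clearly in the code itself, so within half a day I understood the skeleton of the whole framework. After then reading the summary of Netty4's improvements over Netty3, I went back to the Netty4 source and found it much easier, with a sudden sense of clarity. I remember that when I read the Jetty source code, the code base was so large and my understanding of HTTP servers so limited that I could only stack up the modules one by one from the bottom, until I finally connected them all and saw its real core skeleton. Now, when I read source code, I have gotten used to sorting out the skeleton first and then extending to the organs, blood, and flesh to see the whole body.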

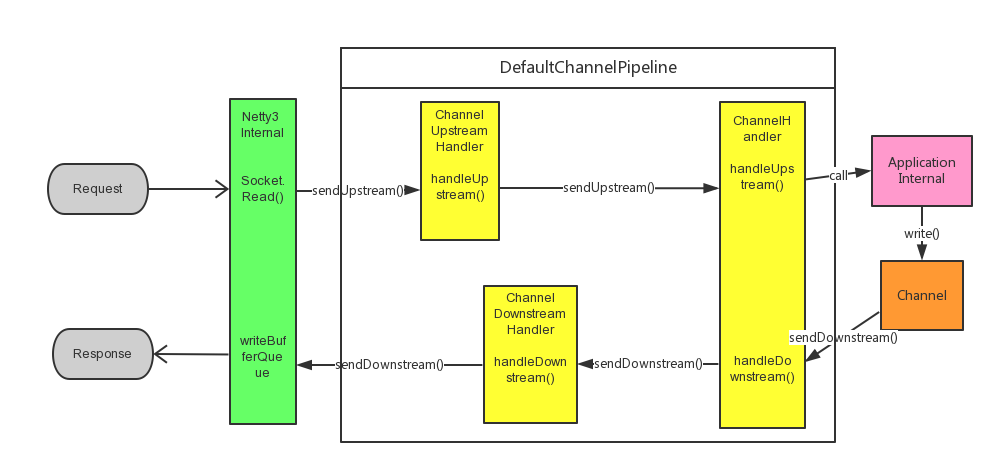

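Starting from how the Reactor pattern is used in Netty3, this article introduces Netty3's overall architecture and control flow. Besides the Reactor pattern, Netty3 also uses the Intercepting Filter pattern in ChannelPipeline; this pattern is also used successfully in Servlet Filters, so this article also starts from the Intercepting Filter pattern to explain the design of ChannelPipeline in detail. The article assumes the reader already has some understanding of Netty, so it contains little introductory material and no text promoting Netty.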

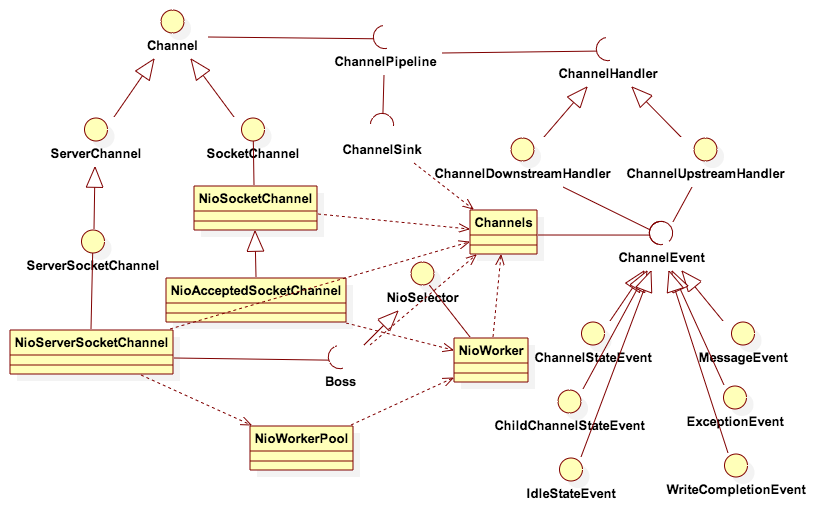

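The Reactor pattern in Netty3

The Reactor pattern has been applied very successfully in Netty, which is why it is so heavily promoted there; for details of the pattern itself, see my other article, “Reactor Pattern Explained”. The Reactor implementation is the basic skeleton of Netty3, so this section explains in detail how the Reactor pattern is applied in Netty3.

If you have read “Reactor Pattern Explained”, you know that the Reactor pattern consists of a Handle, a Synchronous Event Demultiplexer, an Initiation Dispatcher, Event Handlers, and Concrete Event Handlers. In the Java implementation, Channel corresponds to the Handle and Selector to the Synchronous Event Demultiplexer. Netty3 also uses two layers of Reactors: the Main Reactor handles Clients' connection requests, and the Sub Reactor handles read and write requests once a Client is connected (for this concept, see also Doug Lea's slides, Scalable IO In Java). So the first task is to work out which Netty3 classes implement each of these modules and how they are tied together, taking the NIO implementation as the example.

A pattern is an abstraction, but real implementations often adapt it to language features, frameworks, and performance needs, so Netty3 has its own design for implementing the Reactor pattern: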

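1. ChannelEvent: the Reactor is built on event-driven programming, so Netty3 uses ChannelEvent to represent, abstractly, the various events that can occur inside Netty3. All these event objects are created in the Channels helper class, which also pushes them into the ChannelPipeline; the ChannelPipeline forms a pipeline of ChannelHandlers, and a ChannelEvent flows through this pipeline, which carries out all the business logic. The kinds of ChannelEvent are: ChannelStateEvent, which represents a change in a Channel's state, such as OPEN, BOUND, CONNECTED, or INTEREST_OPS; if the Channel has a Parent Channel, the event is also passed on to the Parent Channel's ChannelPipeline, and it can be generated in the various Channel and ChannelSink implementations. MessageEvent, which is triggered when data has been read from the Socket, when data needs to be written to the Socket, or when a ChannelHandler has transformed the current Message (for example a Decoder or Encoder); it is generated by NioWorker and by ChannelHandlers that process the Message further. WriteCompletionEvent, which is triggered when a write completes and is generated by NioWorker. ExceptionEvent, which represents an Exception raised during processing and can come from any component, such as a Channel, ChannelSink, NioWorker, or ChannelHandler. IdleStateEvent, which is triggered by IdleStateHandler and is also an example of how ChannelEvent can be extended seamlessly. Note: since Netty4 there is no ChannelEvent class; each kind of event is expressed by its own method, which also means events can no longer be extended this way. Netty4 instead adds a userEventTriggered() method to ChannelInboundHandler to support this kind of extension.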

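2. ChannelHandler: in Netty3, ChannelHandler represents the EventHandler of the Reactor pattern. ChannelHandler is only a marker interface with two sub-interfaces, ChannelDownstreamHandler and ChannelUpstreamHandler. ChannelDownstreamHandler represents the pipeline that flows from the user application into Netty3 and down to writing data to the Socket; it was renamed ChannelOutboundHandler in Netty4. ChannelUpstreamHandler represents the pipeline in which data comes in from the Socket and flows through Netty3 up to the user application; it was renamed ChannelInboundHandler in Netty4.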

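3. ChannelPipeline: the pipeline that manages ChannelHandlers. Each Channel has one ChannelPipeline instance, and ChannelHandlers can be added to or removed from it dynamically at runtime (because of implementation limits, a ChannelHandler added or removed after the last ChannelHandler while an event is being processed may not take effect in the current execution flow). Internally, ChannelPipeline maintains a doubly linked list of ChannelHandlers, taking the Upstream (Inbound) direction as forward and the Downstream (Outbound) direction as backward. ChannelPipeline is implemented with the Intercepting Filter pattern, whose implementation is described in detail in a later section.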

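4. NioSelector: Netty3 uses NioSelector to hold the Selector (the Synchronous Event Demultiplexer). Every newly created NIO Channel registers itself with this Selector so that the Selector monitors the events occurring on that NIO Channel. When an event occurs, methods of the Channels helper class are called to create a ChannelEvent instance, which is sent to the ChannelPipeline of the corresponding Netty Channel and handed to the various ChannelHandlers for processing. When an NIO Channel is registered with the Selector, the Netty Channel instance is passed in as the attachment; whenever an event occurs on the underlying NIO Channel, the Netty Channel is available as the attachment of the SelectionKey, so each event can obtain the associated Netty Channel directly from the attachment, and from the Netty Channel the associated ChannelPipeline. This is exactly the same as in Doug Lea's Scalable IO In Java. Netty3 also adopts the Main Reactor and Sub Reactor design from Scalable IO In Java: of NioSelector's two implementations, Boss is the Main Reactor and NioWorker is the Sub Reactor. Boss handles newly arriving connections, and NioWorker handles each connection's Socket read and write events. Boss obtains NioWorker instances through NioWorkerPool, which by default hands them out in round-robin fashion. Doug Lea's Scalable IO In Java has a figure that illustrates this more vividly.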

Mapped onto the Reactor pattern, NioSelector contains the Synchronous Event Demultiplexer and ChannelPipeline manages all the EventHandlers, so NioSelector and ChannelPipeline together make up the Initiation Dispatcher.

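5. ChannelSink: when the ChannelHandlers have finished their logic and need to write response data to the client, they usually call the write method of the Netty Channel. That write implementation does not write to the underlying Socket directly, though: it hands the data to the Channels helper class, which creates a DownstreamMessageEvent that travels backward through the ChannelPipeline until the first ChannelHandler has processed it, and finally hands it to the ChannelSink, so that blocking writes do not hurt the program's throughput. The ChannelSink submits this MessageEvent to the Netty Channel's writeBufferQueue, and the NioWorker later writes the data to the NIO Channel without blocking once that NIO Channel is ready for writing. This is why, in Doug Lea's Sub Reactor figure, send starts directly from the Sub Reactor.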

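6. Channel: Netty has its own Channel abstraction. It is a resource container that holds references to every resource involved in a connection, such as the wrapped NIO Channel, the ChannelPipeline, the Boss and the NioWorkerPool. It also provides a write method for writing response data to the underlying NIO Channel, connect/bind methods for connecting or binding to an address, and so on.

In my view, these methods are reasonable on Channel if you look at Channel on its own, since it wraps an NIO Channel. Netty's architecture, however, treats Channel as a resource container from which nearly every related resource can be reached. Actions such as write, connect and bind should therefore be the responsibility of other classes rather than of Channel itself. For example, Netty4 adds a write method to ChannelHandlerContext, although it does not remove the write method from Channel.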
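Items 4 and 5 above rely on two plain NIO mechanisms:

- The Netty Channel travels as the SelectionKey attachment.
- Writes are only queued, then flushed by the worker once the NIO channel reports that it is writable.

The sketch below shows both using only JDK NIO, with a Pipe standing in for a socket connection. ModelChannel, its writeBufferQueue and ReactorWriteDemo are hypothetical names for illustration, not Netty3's classes. Netty3's real write path has more detail (for example, it may write immediately when the socket already has room), which this model leaves out.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.Queue;

class ReactorWriteDemo {
    public static void main(String[] args) {
        System.out.println(run("hello ", "netty"));
    }

    // Queues the messages, flushes them when the selector reports OP_WRITE,
    // and returns what arrived at the other end of the pipe.
    static String run(String... messages) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel out = pipe.sink(); Pipe.SourceChannel in = pipe.source()) {
                out.configureBlocking(false);  // required before registering with a Selector
                ModelChannel channel = new ModelChannel(out);
                // Register the NIO channel with the hypothetical model channel as its attachment.
                SelectionKey key = out.register(selector, 0, channel);

                int total = 0;
                for (String m : messages) total += channel.write(m);  // only enqueues
                key.interestOps(SelectionKey.OP_WRITE);               // ask to be woken when writable

                selector.select();
                for (SelectionKey k : selector.selectedKeys()) {
                    // The attachment leads straight back to the model channel.
                    if (k.isWritable()) ((ModelChannel) k.attachment()).flush();
                }
                selector.selectedKeys().clear();

                ByteBuffer received = ByteBuffer.allocate(total);
                while (received.hasRemaining()) in.read(received);  // blocking read on the peer end
                received.flip();
                return StandardCharsets.UTF_8.decode(received).toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

// Hypothetical stand-in for a Netty Channel: write() only queues, flush() drains without blocking.
class ModelChannel {
    private final Queue<ByteBuffer> writeBufferQueue = new ArrayDeque<>();
    private final Pipe.SinkChannel nioChannel;

    ModelChannel(Pipe.SinkChannel nioChannel) {
        this.nioChannel = nioChannel;
    }

    int write(String msg) {
        byte[] bytes = msg.getBytes(StandardCharsets.UTF_8);
        writeBufferQueue.add(ByteBuffer.wrap(bytes));
        return bytes.length;
    }

    void flush() throws IOException {
        ByteBuffer buf;
        while ((buf = writeBufferQueue.peek()) != null) {
            nioChannel.write(buf);           // non-blocking: may write only part of the buffer
            if (buf.hasRemaining()) return;  // kernel buffer full: wait for the next OP_WRITE
            writeBufferQueue.poll();
        }
    }
}
```

The caller of write() never touches the NIO channel; only the code that reacts to the selector does, which mirrors why send originates from the subReactor.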

The Intercepting Filter Pattern in Netty3

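If the Reactor pattern is the skeleton of Netty3, the Intercepting Filter pattern is its nervous system. The Reactor pattern is used mainly in Netty3's internal implementation and is the basis of its good performance. The Intercepting Filter pattern, in contrast, is the basis for composing ChannelHandlers into application logic. Only with a good understanding of this pattern can you use Netty well, let alone use it with ease.

For a detailed introduction to the Intercepting Filter pattern, see the Intercepting Filter Pattern reference below. This section focuses on how Netty3 implements the pattern, which in practice means how DefaultChannelPipeline implements it.

As mentioned above, Netty3's ChannelPipeline is the container that stores and manages ChannelHandlers. It also acts as a bridge, connecting Netty3's internals to all the ChannelHandlers. DefaultChannelPipeline, the implementation of ChannelPipeline, stores the handlers in a doubly linked list. The nodes of that list are DefaultChannelHandlerContext instances, which implement ChannelHandlerContext: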

public interface ChannelHandlerContext {
    Channel getChannel();
    ChannelPipeline getPipeline();
    String getName();
    ChannelHandler getHandler();
    boolean canHandleUpstream();
    boolean canHandleDownstream();
    void sendUpstream(ChannelEvent e);
    void sendDownstream(ChannelEvent e);
    Object getAttachment();
    void setAttachment(Object attachment);
}

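// Inner class of DefaultChannelPipeline: one node of the doubly linked handler list
private final class DefaultChannelHandlerContext implements ChannelHandlerContext {
    volatile DefaultChannelHandlerContext next;  // toward the tail (Upstream direction)
    volatile DefaultChannelHandlerContext prev;  // toward the head (Downstream direction)
    private final String name;
    private final ChannelHandler handler;
    private final boolean canHandleUpstream;     // handler is a ChannelUpstreamHandler
    private final boolean canHandleDownstream;   // handler is a ChannelDownstreamHandler
    private volatile Object attachment;
    // ...
}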
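Stripped of Netty specifics, the Intercepting Filter pattern is a chain of filters in front of a target. Each filter does its own work and then explicitly passes the request on, much as a ChannelHandler calls ctx.sendUpstream(e).

The generic sketch below uses the hypothetical names Filter, FilterChain, Target and InterceptingFilterDemo. Unlike DefaultChannelPipeline, this version keeps the chain in a list with an index rather than in a doubly linked list.

```java
import java.util.ArrayList;
import java.util.List;

class InterceptingFilterDemo {
    public static void main(String[] args) {
        System.out.println(run("hello"));
    }

    static List<String> run(String request) {
        List<String> log = new ArrayList<>();
        List<Filter> filters = List.<Filter>of(
            (req, chain) -> { log.add("auth " + req); chain.proceed(req); },
            // A filter may transform the request before passing it on.
            (req, chain) -> { log.add("decode " + req); chain.proceed(req.toUpperCase()); });
        new FilterChain(filters, req -> log.add("target " + req)).proceed(request);
        return log;
    }
}

interface Filter {
    void doFilter(String request, FilterChain chain);
}

interface Target {
    void handle(String request);
}

// Runs the filters in order; the target is reached only if every filter calls proceed().
class FilterChain {
    private final List<Filter> filters;
    private final Target target;
    private int index = 0;  // position of the next filter to invoke

    FilterChain(List<Filter> filters, Target target) {
        this.filters = filters;
        this.target = target;
    }

    void proceed(String request) {
        if (index < filters.size()) {
            filters.get(index++).doFilter(request, this);
        } else {
            target.handle(request);
        }
    }
}
```

Running it prints `[auth hello, decode hello, target HELLO]`. A filter that returns without calling proceed() stops the request, just as a ChannelHandler that does not call sendUpstream/sendDownstream ends propagation.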

DefaultChannelPipeline holds the Channel and ChannelSink associated with the pipeline, plus the head and tail of the ChannelHandler list. Every ChannelEvent enters through sendUpstream or sendDownstream and flows through the list from there:

// Excerpt from DefaultChannelPipeline
private volatile Channel channel;
private volatile ChannelSink sink;
private volatile DefaultChannelHandlerContext head;
private volatile DefaultChannelHandlerContext tail;

public void sendUpstream(ChannelEvent e) {
    // Start at the first upstream-capable context, searching from the head
    DefaultChannelHandlerContext head = getActualUpstreamContext(this.head);
    if (head == null) {
        return;
    }
    sendUpstream(head, e);
}

void sendUpstream(DefaultChannelHandlerContext ctx, ChannelEvent e) {
    try {
        ((ChannelUpstreamHandler) ctx.getHandler()).handleUpstream(ctx, e);
    } catch (Throwable t) {
        notifyHandlerException(e, t);
    }
}

public void sendDownstream(ChannelEvent e) {
    // Start at the last downstream-capable context, searching from the tail
    DefaultChannelHandlerContext tail = getActualDownstreamContext(this.tail);
    if (tail == null) {
        // No downstream handler at all: hand the event straight to the ChannelSink
        try {
            getSink().eventSunk(this, e);
            return;
        } catch (Throwable t) {
            notifyHandlerException(e, t);
            return;
        }
    }
    sendDownstream(tail, e);
}

void sendDownstream(DefaultChannelHandlerContext ctx, ChannelEvent e) {
    if (e instanceof UpstreamMessageEvent) {
        throw new IllegalArgumentException("cannot send an upstream event to downstream");
    }
    try {
        ((ChannelDownstreamHandler) ctx.getHandler()).handleDownstream(ctx, e);
    } catch (Throwable t) {
        // The event never reached the sink, so fail its future here
        e.getFuture().setFailure(t);
        notifyHandlerException(e, t);
    }
}

For Upstream events, the pipeline walks forward (via next) and picks only the handlers that implement ChannelUpstreamHandler, which form the upstream chain (getActualUpstreamContext()). For Downstream events, it walks backward (via prev) and picks only the handlers that implement ChannelDownstreamHandler (getActualDownstreamContext()):

private DefaultChannelHandlerContext getActualUpstreamContext(DefaultChannelHandlerContext ctx) {
    if (ctx == null) {
        return null;
    }
    DefaultChannelHandlerContext realCtx = ctx;
    // Walk forward until the handler is a ChannelUpstreamHandler
    while (!realCtx.canHandleUpstream()) {
        realCtx = realCtx.next;
        if (realCtx == null) {
            return null;
        }
    }
    return realCtx;
}

private DefaultChannelHandlerContext getActualDownstreamContext(DefaultChannelHandlerContext ctx) {
    if (ctx == null) {
        return null;
    }
    DefaultChannelHandlerContext realCtx = ctx;
    // Walk backward until the handler is a ChannelDownstreamHandler
    while (!realCtx.canHandleDownstream()) {
        realCtx = realCtx.prev;
        if (realCtx == null) {
            return null;
        }
    }
    return realCtx;
}
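The skipping done by getActualUpstreamContext and getActualDownstreamContext is what lets one linked list serve both directions. Here is a small self-contained model of it.

Ctx and PropagationDemo are hypothetical simplifications of DefaultChannelHandlerContext and DefaultChannelPipeline. The boolean flags replace the instanceof checks on ChannelUpstreamHandler/ChannelDownstreamHandler, and the "sink" log entry stands in for ChannelSink.eventSunk.

```java
import java.util.ArrayList;
import java.util.List;

class PropagationDemo {
    public static void main(String[] args) {
        System.out.println(run());
    }

    static List<String> run() {
        List<String> log = new ArrayList<>();
        // head -> tail: decoder (upstream only), encoder (downstream only), business (upstream only)
        Ctx decoder = new Ctx("decoder", true, false, log);
        Ctx encoder = new Ctx("encoder", false, true, log);
        Ctx business = new Ctx("business", true, false, log);
        decoder.next = encoder; encoder.prev = decoder;
        encoder.next = business; business.prev = encoder;

        // Pipeline-level sendUpstream: first upstream-capable context, searching from the head.
        Ctx first = Ctx.actualUpstream(decoder);
        if (first != null) first.handleUpstream("read");

        // Pipeline-level sendDownstream: last downstream-capable context, searching from the tail.
        Ctx last = Ctx.actualDownstream(business);
        if (last == null) log.add("sink write");
        else last.handleDownstream("write");
        return log;
    }
}

class Ctx {
    final String name;
    final boolean canUp, canDown;
    final List<String> log;
    Ctx prev, next;

    Ctx(String name, boolean canUp, boolean canDown, List<String> log) {
        this.name = name;
        this.canUp = canUp;
        this.canDown = canDown;
        this.log = log;
    }

    // Skip forward over contexts that cannot handle upstream events.
    static Ctx actualUpstream(Ctx c) {
        while (c != null && !c.canUp) c = c.next;
        return c;
    }

    // Skip backward over contexts that cannot handle downstream events.
    static Ctx actualDownstream(Ctx c) {
        while (c != null && !c.canDown) c = c.prev;
        return c;
    }

    // The "handler logic" is just logging; then forward like ctx.sendUpstream(e).
    void handleUpstream(String e) {
        log.add(name + " up " + e);
        Ctx n = actualUpstream(next);
        if (n != null) n.handleUpstream(e);
    }

    // Forward like ctx.sendDownstream(e); once past the head, the event goes to the sink.
    void handleDownstream(String e) {
        log.add(name + " down " + e);
        Ctx p = actualDownstream(prev);
        if (p == null) log.add("sink " + e);
        else p.handleDownstream(e);
    }
}
```

The result is `[decoder up read, business up read, encoder down write, sink write]`: the read event skips the encoder, and the write event skips both inbound handlers before reaching the sink.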

When implementing a ChannelUpstreamHandler or ChannelDownstreamHandler, you pass control on in one of two ways:

- Call sendUpstream or sendDownstream on the ChannelHandlerContext to hand control to the next ChannelUpstreamHandler or ChannelDownstreamHandler.
- Call write on the Channel to send a response message.

For example:

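import org.jboss.netty.channel.*;

// Each public class goes in its own source file.
public class MyChannelUpstreamHandler implements ChannelUpstreamHandler {
    public void handleUpstream(ChannelHandlerContext ctx, ChannelEvent e) throws Exception {
        // handle current logic, use Channel to write response if needed.
        // ctx.getChannel().write(message);
        ctx.sendUpstream(e);  // pass the event to the next upstream handler
    }
}

public class MyChannelDownstreamHandler implements ChannelDownstreamHandler {
    public void handleDownstream(ChannelHandlerContext ctx, ChannelEvent e) throws Exception {
        // handle current logic
        ctx.sendDownstream(e);  // pass the event to the next downstream handler (or the sink)
    }
}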

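When a ChannelHandler sends an event through its ChannelHandlerContext, the DefaultChannelHandlerContext starts from its own node. It finds the next ChannelUpstreamHandler or ChannelDownstreamHandler and sends the ChannelEvent to it. On the Downstream chain, if the search runs past the head of the list, the ChannelEvent is sent to the ChannelSink: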

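// Inside DefaultChannelHandlerContext
public void sendDownstream(ChannelEvent e) {
    // Search backward from the previous node for the next downstream handler
    DefaultChannelHandlerContext prev = getActualDownstreamContext(this.prev);
    if (prev == null) {
        // Past the head of the list: the event goes to the ChannelSink
        try {
            getSink().eventSunk(DefaultChannelPipeline.this, e);
        } catch (Throwable t) {
            notifyHandlerException(e, t);
        }
    } else {
        DefaultChannelPipeline.this.sendDownstream(prev, e);
    }
}

public void sendUpstream(ChannelEvent e) {
    // Search forward from the next node; if none is found, the event goes no further
    DefaultChannelHandlerContext next = getActualUpstreamContext(this.next);
    if (next != null) {
        DefaultChannelPipeline.this.sendUpstream(next, e);
    }
}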

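This implementation has a consequence. Suppose the last ChannelUpstreamHandler first removes itself and then appends a new ChannelUpstreamHandler to the end of the pipeline. The new handler does not receive the current event. The removed handler's context was the tail when it was removed, so its next is null, and appending a new handler does not update that pointer.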
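The pitfall can be reproduced with a stripped-down model in which removing a node only relinks its neighbors and leaves the node's own pointers alone. For the old tail, next is already null. Chain, Node, UpHandler and TailReplaceDemo are hypothetical names, and only upstream forwarding is modeled.

```java
import java.util.ArrayList;
import java.util.List;

class TailReplaceDemo {
    public static void main(String[] args) {
        System.out.println(run());
    }

    static List<String> run() {
        List<String> log = new ArrayList<>();
        Chain chain = new Chain();
        chain.addLast("first", (ctx, e) -> { log.add("first " + e); ctx.sendUpstream(e); });
        chain.addLast("last", (ctx, e) -> {
            log.add("last " + e);
            chain.remove(ctx);                                                     // the tail removes itself...
            chain.addLast("replacement", (c, e2) -> log.add("replacement " + e2)); // ...and appends a new tail
            ctx.sendUpstream(e);  // ctx.next is still null, so this event goes nowhere
        });
        chain.sendUpstream("msg1");  // never reaches "replacement"
        chain.sendUpstream("msg2");  // later events do
        return log;
    }
}

interface UpHandler {
    void handle(Node ctx, String event);
}

class Node {
    final String name;
    final UpHandler handler;
    Node prev, next;

    Node(String name, UpHandler handler) {
        this.name = name;
        this.handler = handler;
    }

    // Forward to whatever this node's next pointer currently says.
    void sendUpstream(String event) {
        if (next != null) next.handler.handle(next, event);
    }
}

class Chain {
    private Node head, tail;

    void addLast(String name, UpHandler handler) {
        Node n = new Node(name, handler);
        if (tail == null) { head = tail = n; return; }
        n.prev = tail;
        tail.next = n;
        tail = n;
    }

    // Relinks the neighbors only; n.prev and n.next are left as they were.
    void remove(Node n) {
        if (n.prev != null) n.prev.next = n.next; else head = n.next;
        if (n.next != null) n.next.prev = n.prev; else tail = n.prev;
    }

    void sendUpstream(String event) {
        if (head != null) head.handler.handle(head, event);
    }
}
```

The log is `[first msg1, last msg1, first msg2, replacement msg2]`: the replacement only starts receiving events from the next one onward.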

As the container of ChannelHandlers, ChannelPipeline also provides methods for adding, removing and replacing handlers in the list. If a ChannelHandler also implements LifeCycleAwareChannelHandler, it is notified when it is added to or removed from the ChannelPipeline:

public interface LifeCycleAwareChannelHandler extends ChannelHandler {
    void beforeAdd(ChannelHandlerContext ctx) throws Exception;
    void afterAdd(ChannelHandlerContext ctx) throws Exception;
    void beforeRemove(ChannelHandlerContext ctx) throws Exception;
    void afterRemove(ChannelHandlerContext ctx) throws Exception;
}

public interface ChannelPipeline {
    // Adding handlers
    void addFirst(String name, ChannelHandler handler);
    void addLast(String name, ChannelHandler handler);
    void addBefore(String baseName, String name, ChannelHandler handler);
    void addAfter(String baseName, String name, ChannelHandler handler);

    // Removing handlers
    void remove(ChannelHandler handler);
    ChannelHandler remove(String name);
    <T extends ChannelHandler> T remove(Class<T> handlerType);
    ChannelHandler removeFirst();
    ChannelHandler removeLast();

    // Replacing handlers
    void replace(ChannelHandler oldHandler, String newName, ChannelHandler newHandler);
    ChannelHandler replace(String oldName, String newName, ChannelHandler newHandler);
    <T extends ChannelHandler> T replace(Class<T> oldHandlerType, String newName, ChannelHandler newHandler);

    // Looking up handlers and their contexts
    ChannelHandler getFirst();
    ChannelHandler getLast();
    ChannelHandler get(String name);
    <T extends ChannelHandler> T get(Class<T> handlerType);
    ChannelHandlerContext getContext(ChannelHandler handler);
    ChannelHandlerContext getContext(String name);
    ChannelHandlerContext getContext(Class<? extends ChannelHandler> handlerType);

    // Event entry points and misc
    void sendUpstream(ChannelEvent e);
    void sendDownstream(ChannelEvent e);
    ChannelFuture execute(Runnable task);
    Channel getChannel();
    ChannelSink getSink();
    void attach(Channel channel, ChannelSink sink);
    boolean isAttached();
    List<String> getNames();
    Map<String, ChannelHandler> toMap();
}
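The notification order for LifeCycleAwareChannelHandler can be sketched with a toy pipeline. It checks whether a handler implements a lifecycle interface and, if so, calls the before/after hooks around each add and remove.

MiniHandler, LifeCycleAware, Tracker and LifecyclePipeline are hypothetical names. Unlike the real interface, whose callbacks receive a ChannelHandlerContext, these callbacks receive only the handler's name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LifecycleDemo {
    public static void main(String[] args) {
        System.out.println(run());
    }

    static List<String> run() {
        List<String> log = new ArrayList<>();
        LifecyclePipeline pipeline = new LifecyclePipeline();
        pipeline.addLast("plain", new MiniHandler() { });  // not lifecycle-aware: no callbacks
        pipeline.addLast("aware", new Tracker(log));       // lifecycle-aware: gets notified
        pipeline.remove("aware");
        return log;
    }
}

interface MiniHandler { }  // marker interface, playing the role of ChannelHandler

interface LifeCycleAware extends MiniHandler {
    void beforeAdd(String name);
    void afterAdd(String name);
    void beforeRemove(String name);
    void afterRemove(String name);
}

class Tracker implements LifeCycleAware {
    private final List<String> log;

    Tracker(List<String> log) { this.log = log; }

    public void beforeAdd(String name) { log.add("beforeAdd " + name); }
    public void afterAdd(String name) { log.add("afterAdd " + name); }
    public void beforeRemove(String name) { log.add("beforeRemove " + name); }
    public void afterRemove(String name) { log.add("afterRemove " + name); }
}

class LifecyclePipeline {
    private final Map<String, MiniHandler> handlers = new LinkedHashMap<>();  // keeps insertion order

    void addLast(String name, MiniHandler handler) {
        LifeCycleAware aware = handler instanceof LifeCycleAware ? (LifeCycleAware) handler : null;
        if (aware != null) aware.beforeAdd(name);
        handlers.put(name, handler);
        if (aware != null) aware.afterAdd(name);
    }

    void remove(String name) {
        MiniHandler handler = handlers.get(name);
        if (!(handler instanceof LifeCycleAware)) {
            handlers.remove(name);
            return;
        }
        LifeCycleAware aware = (LifeCycleAware) handler;
        aware.beforeRemove(name);
        handlers.remove(name);
        aware.afterRemove(name);
    }
}
```

Only the lifecycle-aware handler produces log entries, in the order beforeAdd, afterAdd, beforeRemove, afterRemove.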

[Figure: processing flow of the ChannelHandler chain in DefaultChannelPipeline]

http://m.tkk7.com/DLevin/archive/2015/09/04/427031.html

References:

Netty home page

Netty source code walkthrough (4): Netty and the Reactor pattern

Netty code analysis

Scalable IO In Java

Intercepting Filter Pattern

]]>

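Why not choose Netty or Mina?

My personal view: Netty and Mina are too large and costly to learn. The handler chains are too long to step through when debugging, and the frameworks are hard to modify yourself.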

]]>

]]>

Netty 4.0 study notes, part 2: the execution order of handlers

Netty 4.0 study notes, part 3: building a simple HTTP service

Netty 4.0 study notes, part 4: using coders and handlers together

Netty 4.0 study notes, part 5: a custom communication protocol

Netty 4.0 study notes, part 6: supporting multiple communication protocols

Netty HTTP JAX-RS server

https://github.com/continuuity/netty-http

Hello world performance comparison of Netty and Tomcat

http://my.oschina.net/u/2302546/blog/368685

Pros and cons of nginx+Tomcat versus Netty

Official Netty examples

https://github.com/netty/netty/tree/4.0/example/src/main/java/io/netty/example

]]>